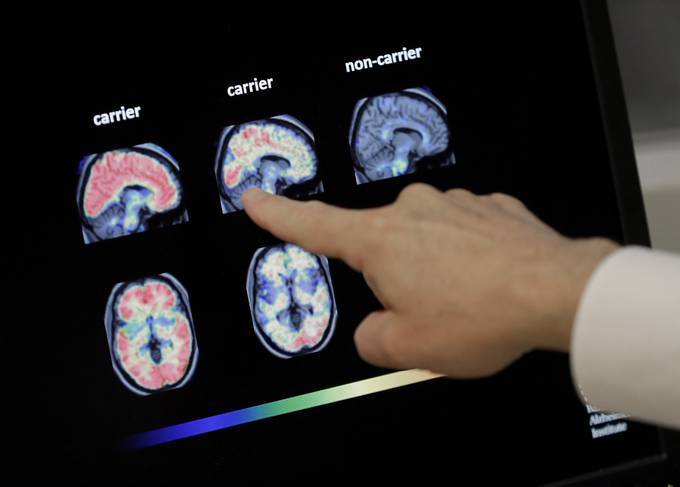

For four months, Facebook censored claims that the coronavirus originated in a lab in Wuhan, China. But the company has reversed its stance, in a prime example of how the pandemic has intensified the free speech questions already plaguing social media titans. The decision has thrown into sharp relief the challenges of evaluating misinformation amid evolving scientific debates.

Security personnel stand guard outside the Wuhan Institute of Virology. (Hector Retamal/AFP/Getty Images)

Facebook no longer is removing statements that "COVID-19 is man-made or manufactured."

The company announced its decision last month amid renewed debate over the origins of the virus. A Facebook representative didn't specify how many such posts were removed, but the reversal is a clear example of the huge power social media companies hold over the information users can see. Facebook has removed more than 18 million posts that violate its covid-19 or vaccine misinformation policies.

Twitter says it has removed more than 8,400 tweets and challenged millions of accounts since introducing its coronavirus guidelines. Those guidelines include a prohibition on attributing the pandemic to "a deliberate conspiracy by malicious and/or powerful forces." But the company would not specify whether it has been deleting claims that the virus originated in a lab.

The companies have been under intense pressure from Democrats to rein in false or misleading claims about the virus on their platforms. Members of the House Energy and Commerce Committee grilled tech CEOs in March about these efforts, and lawmakers have threatened regulation aimed at scaling back the platforms' liability protections if they don't do more to combat false claims online. But in the case of the lab leak hypothesis, Facebook chose to censor without a scientific consensus to back up its decision.
Many scientists say that a natural origin, in which the virus jumped from animals to humans, is the most likely scenario, but scientists and reporters have shown a growing willingness to publicly entertain the theory that the virus may have escaped from a lab in China. A letter signed by 18 scientists last month said that both the theory that the virus spilled over from animals and the theory that it escaped from a lab "remain viable."

One of the scientists who signed the letter, David Relman, a microbiologist at Stanford University, welcomed Facebook's policy shift, calling the company's initial decision to censor claims that the virus was man-made "a mistake."

"I understand that this question [of lab origins] got wrapped up and conflated with a whole lot of other language and messaging that was clearly harmful and ill-intended," Relman said. "It is an example of where in their efforts to clean things up, they may have thrown out the baby with the bathwater."

But there's still a fringe theory hanging around.

Some conspiracy theorists have claimed the Chinese intentionally engineered the coronavirus to be used as a bioweapon. There is no public evidence that the virus was purposefully created or genetically altered in a lab, and many experts say its features make that very unlikely. Even vocal proponents of the lab leak as a viable hypothesis are quick to dismiss theories that the virus is some sort of engineered bioweapon as far-fetched, but those types of claims could proliferate under Facebook's new policy.

Under Facebook's now-reversed policy, the company removed any claims that directly stated or implied that the virus was man-made. A company representative did not specify whether this included claims that the virus escaped from a lab if the claims didn't also say the virus was manipulated in the lab.
Facebook's policy shift underscores how hard it can be to determine what's true and what's false.

"With emerging viruses, we live in a world where our knowledge and understanding can shift on a dime, especially because these viruses tend to spill over unpredictably or we get brand-new ones," said Jason Kindrachuk, a virologist at the University of Manitoba who studies the origin of emerging diseases.

Kindrachuk sees the possibility of a lab origin as worthy of investigation but very unlikely. He worries that media coverage is putting theories about a lab origin on the same playing field as what he views as the much more credible scenario of a natural origin. Scientists are trained to talk about caveats and emphasize areas of uncertainty, he says, but that habit can be used to fuel narratives that are misleading or wrong.

"It becomes very, very hard to sort out between conspiracy theories and reasonable and particularly low probability, non-conspiracy explanations for things," said Carl Bergstrom, a University of Washington biology professor who studies infectious diseases and writes about misinformation.

One of the challenges, Bergstrom said, is that the platforms fulfill multiple roles. Twitter in particular, he said, has been an important forum for scientific discussions during the pandemic, a role made possible by robust, open debate. But he also says misinformation can cause immediate real-world harm, especially when it includes prescriptive advice about how to cure or detect the virus, or anti-vaccine propaganda.

"As a public health person, I'd like to see a platform that is broadly used by many people at least promote strongly evidence-based, consensus-based health advice. As a scientist looking for discussion platform online, I want to see minority views expressed, discussed and shot down," Bergstrom said.

Facebook CEO Mark Zuckerberg testifies before Congress in 2019. (Susan Walsh/AP)

Facebook's decision comes as social media companies face intense pressure to moderate coronavirus posts.

Social media companies were already under pressure from lawmakers to remove false or misleading information following allegations of Russian interference in the 2016 election. Democrats have ramped up that pressure amid the pandemic, even as Republicans have protested that tech companies are biased in the information they choose to fact-check and the conclusions they reach. Studies from advocacy groups, as well as internal research from Facebook itself, suggest that a small number of users account for a disproportionate amount of anti-vaccine content.

Tech companies that long refused to wade into the fact-checking business have promised a more aggressive approach in recent years, and some of the changes could be long-lasting. Facebook, for instance, is no longer just censoring misinformation about coronavirus vaccines; it has also announced that it will remove false claims about the effectiveness or side effects of other vaccines, including claims that childhood vaccinations cause autism.

"Clearly the trajectory over time has been to move away from a hands-off approach," said David Kaye, the former United Nations Special Rapporteur on the promotion and protection of free expression. "The experience of the past year and half dealing with covid and disinformation around covid has probably alerted senior people at these companies to very real ways the platforms can be used or abused to cause offline harm."
